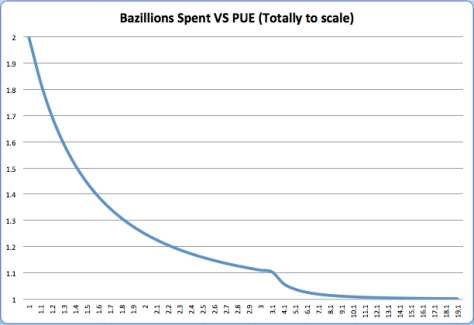

Everyone knows how hard it is to continue to improve PUE. I’ve even included a graphic representation of how spending can influence your PUE.

So we all know our struggles, and as the industry matures it becomes easier to increase efficiency under a given budget constraint. But the general idea, that every dollar spent has less impact than the dollar before it (save for some hurdle points), will generally hold true.

Now, where you choose to plot your dot on the graph is an internal decision. How efficient should we make the center? How important is a lower first cost? In general, my opinion leans toward the more efficient setup, optimizing Net Present Value (NPV). But at some point, more efficiency leads to a lower NPV, and that balance point should be determined as a financial decision. In my opinion, many centers have all too often erred toward first cost reductions to meet a quarterly goal, as opposed to targeting the long-term financial viability of the firm.

Why am I of this opinion? Well, we hear the average PUE figures that businesses self-report, and year after year the figures disappoint. Yet we can see industry leaders, such as Google, operate an entire portfolio with an average of 1.12 while using less favorable evaluation methods for PUE than many others. Some might say companies like Google have money to throw around, and they do, but building data centers is still a business decision for them. They are planning for the lifecycle of the facility. If your timeline of financial analysis for a data center is 15 years, then you should evaluate every aspect of the center with that same perspective. You have to buy a generator, you have to buy switchgear, and you have to bring in the connectivity. If you build a business case based on a 15-year outlook on those things, that outlook needs to extend to the equipment efficiencies. Air-cooled RTUs vs. an evaporative system? The evaporative system might be more than twice as expensive when evaluated on first cost, but it may have an extremely positive impact on the project NPV (which is what a real corporate finance decision targeting shareholder value will evaluate).
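To make the first cost vs. NPV argument concrete, here is a rough Python sketch. Every number in it (both first costs, both annual energy costs, and the 8% discount rate) is a made-up assumption for illustration, not data from a real project, and the function names are my own. It only compares cost streams, so the "better" option is the one with the less negative NPV.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] happens today (year 0)."""
    return sum(cf / (1 + rate) ** year for year, cf in enumerate(cash_flows))


def cooling_option_npv(first_cost, annual_energy_cost, years=15, rate=0.08):
    """NPV of owning a cooling system; every cash flow is a cost (negative)."""
    flows = [-first_cost] + [-annual_energy_cost] * years
    return npv(rate, flows)


# Hypothetical figures: the evaporative system costs more than twice as
# much up front but uses far less energy each year.
air_cooled = cooling_option_npv(first_cost=1_000_000, annual_energy_cost=400_000)
evaporative = cooling_option_npv(first_cost=2_200_000, annual_energy_cost=150_000)

print(f"Air-cooled RTUs NPV: {air_cooled:,.0f}")
print(f"Evaporative NPV:     {evaporative:,.0f}")
print("Evaporative wins" if evaporative > air_cooled else "Air-cooled wins")
```

With these invented inputs the pricier system comes out ahead over 15 years, but shorten the horizon or shrink the energy savings and the answer flips, which is exactly why the evaluation period matters.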

So I rambled way off course, but to get back on topic: how low can we go on PUE, and how low should we try to go? There are some very appealing methods to improve efficiency. Within the ASHRAE recommended bands, evaporative cooling systems like the Schneider EcoBreeze and the Munters Oasis can achieve very high operating efficiencies in nearly any climate. Line-interactive UPSs can operate at 99% efficiency in Eco-Mode, cooling towers with well-designed economizers can mean chillers that run for just a few hours a year, and high-efficiency transformers with higher voltage distribution have removed much of the inherent I²R losses. Once the air path is optimized too, how much more can the MEP engineer do without endangering server conditions and really racking up the first cost?

Using evaporative cooling systems, however, brings up an interesting point. Evaporation can effectively eliminate nearly all mechanical cooling in many climates when using flexible inlet conditions, but there is a trade-off: it consumes water. Further complicating the balance, using water to reduce kWh generally has a positive net impact. This is because the water used on site is often more than offset by the water that would have been used to generate that power at the power plant, and it also eliminates the environmental impact of generating that kWh. But in times of water shortage, or when the power is generated without the use of water (solar, wind, etc.), the balance might shift. The comparison most operators will make is purely financial (is the cost of the water lower than the cost of the electricity it saves?), but in general we should strive to conserve both resources.
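Here is a small Python sketch of that balance. All of the inputs are hypothetical placeholders (the kWh saved, the on-site water use, the power plant's water intensity, and both prices); plug in your own utility data before drawing any conclusions. A negative result means a net saving.

```python
def water_vs_power_balance(kwh_saved, site_water_l, plant_water_l_per_kwh,
                           water_price_per_l, power_price_per_kwh):
    """Return (net_water_l, net_cost); negative values are net savings."""
    # Water the power plant no longer needs to use for the avoided kWh
    offsite_water_avoided_l = kwh_saved * plant_water_l_per_kwh
    net_water_l = site_water_l - offsite_water_avoided_l
    # What the operator pays for water minus what is saved on electricity
    net_cost = site_water_l * water_price_per_l - kwh_saved * power_price_per_kwh
    return net_water_l, net_cost


# Hypothetical year: 1,000,000 kWh saved by evaporating 1,500,000 L of water.
common = dict(kwh_saved=1_000_000, site_water_l=1_500_000,
              water_price_per_l=0.002, power_price_per_kwh=0.10)

# Assumed water-cooled thermal generation at 2.0 L per kWh
thermal_water, thermal_cost = water_vs_power_balance(plant_water_l_per_kwh=2.0, **common)
# Assumed solar or wind generation using no water
renewable_water, renewable_cost = water_vs_power_balance(plant_water_l_per_kwh=0.0, **common)

print(f"Thermal grid:   net water {thermal_water:,.0f} L, net cost {thermal_cost:,.0f}")
print(f"Renewable grid: net water {renewable_water:,.0f} L, net cost {renewable_cost:,.0f}")
```

Under these assumptions the financial answer is the same in both cases, while the water answer flips sign depending on how the power is generated. That is the shift in balance described above.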

So when pursuing low PUE (and WUE), a facility can be operated fairly efficiently with a few of the technologies listed (EcoBreeze, Eco-Mode UPS, occupancy sensors on lighting, etc.) for a small increase in cost relative to the cost of the facility. But going much further, let's say by implementing rack-level refrigerant pumping systems or some other emerging technology, can drive up the cost of a facility rather quickly.

With regard to the question "how low can you go," I think the better question is how low should you go. Maybe we can’t all run at 1.12 like Google, but we can get within spitting distance without breaking the bank, and certainly do better than the reported industry average figures of 2.00 or greater.
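For a sense of scale, PUE is total facility energy divided by IT energy, so the overhead is simply (PUE - 1) times the IT load. The quick Python sketch below assumes a hypothetical, constant 1,000 kW IT load running all year; the load and the function name are my own illustration, and only the 2.00 and 1.12 figures come from the discussion above.

```python
HOURS_PER_YEAR = 8760


def annual_overhead_kwh(it_load_kw, pue):
    """Energy per year spent on everything other than the IT load itself."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.00")
    return it_load_kw * (pue - 1.0) * HOURS_PER_YEAR


it_load_kw = 1000  # hypothetical constant IT load

for pue in (2.00, 1.50, 1.12, 1.00):
    overhead = annual_overhead_kwh(it_load_kw, pue)
    print(f"PUE {pue:.2f}: {overhead:,.0f} kWh/year of overhead")
```

The gap between 2.00 and 1.12 is most of the overhead; the gap between 1.12 and 1.00 is all that is left to chase.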

But my real point when I started writing this was that the MEP side of things hits an asymptote at a PUE of 1.00. Once we approach that, all we can do is figure out how to do it more reliably (not a huge gain to be made there), do it with less water (there is considerable room to improve there), and do it cheaper. But at some point, it becomes air in, air out, water in, water out. What else is there? All the fun stuff will come from the rack level in: more efficient power supplies, more efficient utilization of processor wattage, software that makes more effective use of CPU cycles, and virtualization to consolidate and make use of the dormant processing power in existing servers.

At some point I think the rack-level improvements will begin reducing the kW usage of the servers AND reduce the need for additional data centers. The ARM chips are racing to improve compute efficiency, Intel continues to make aggressive improvements in its chip efficiencies, and at some point we will turn our attention back to the existing infrastructure. And that is where the fun for the MEP guys will lie: figuring out how to maximize the existing asset and apply the current body of knowledge on operating a center at maximum efficiency. I think that is a much more fun place to play anyway.

PS, I think I will revise this post a few times as I don’t know how well it flows right now…